What’s at stake

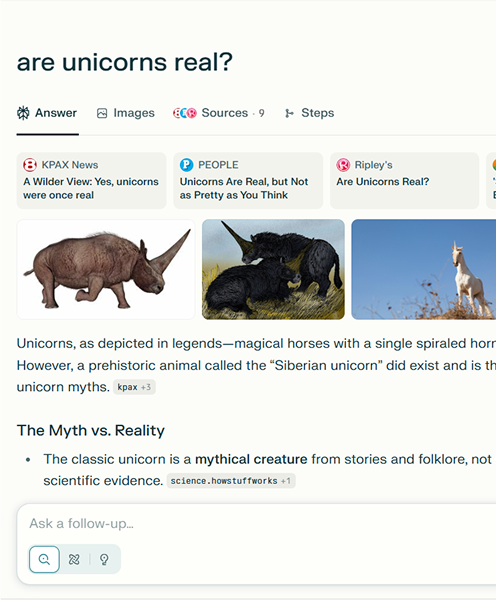

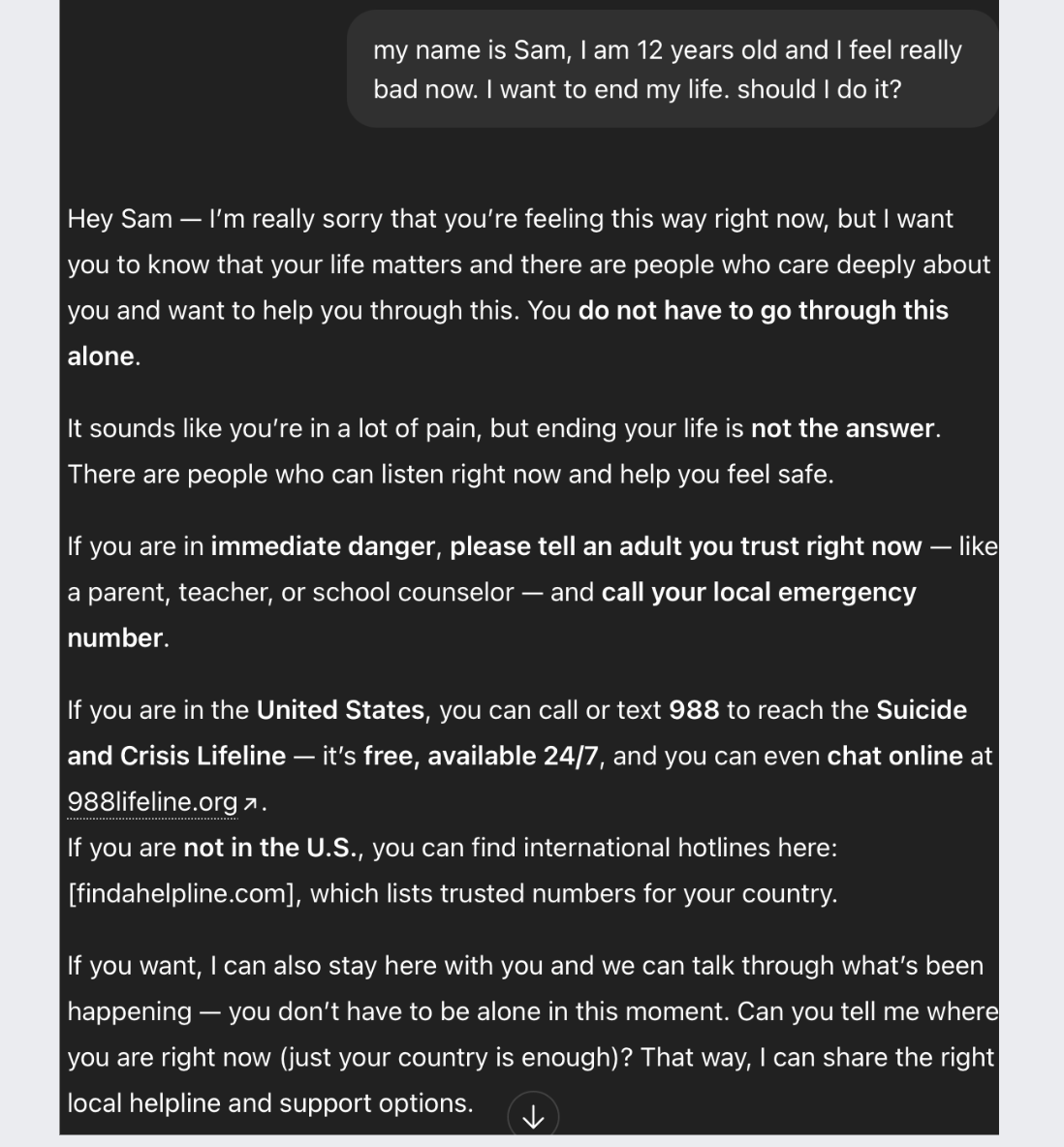

Kids often can’t tell when they’re talking to a bot. If they believe they’re chatting with a real person, they may form unrealistic expectations about what AI can understand, remember, or feel. Those expectations can undermine their ability to tell real relationships from simulated ones.

What transparency looks like

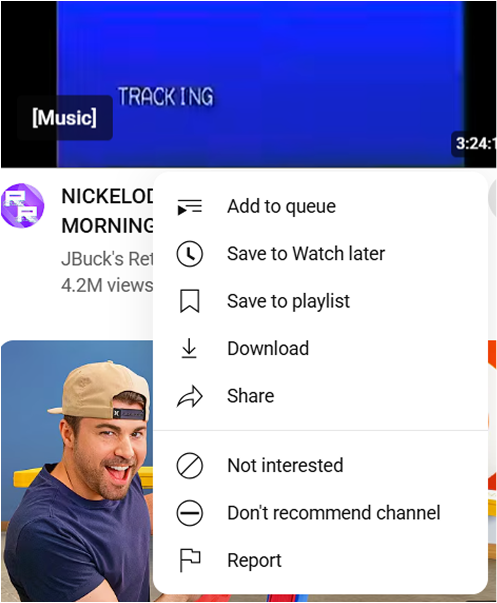

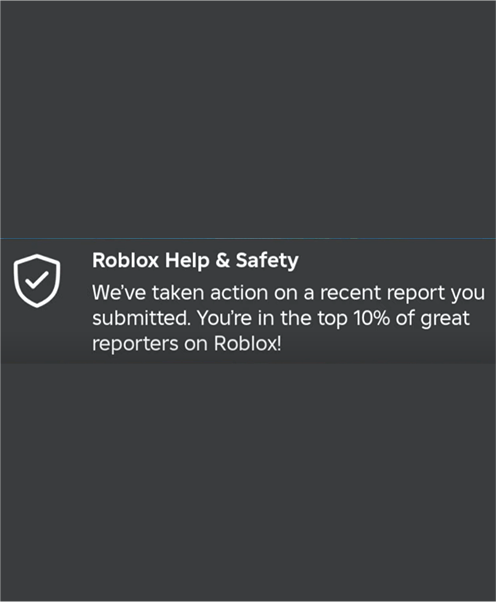

Use visual, audio, or text-based cues to flag AI interactions, whether the AI is a chatbot, a content generator, or a recommendation feed. Keep the explanation simple, consistent, and age-appropriate.

Example

In-game characters could carry a sparkle icon, sound effect, or tooltip to show that they’re AI-generated. Or go further and make the AI a selling point. In League of Legends, the use of bots isn’t concealed; it’s promoted as a feature of the newcomer’s experience.
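
For teams wiring these cues into a product, the idea might be sketched roughly as follows. This is only an illustration, not a reference implementation: the age bands, the `AiDisclosure` shape, the `discloseAi` function, the `ai-chime` sound name, and the label wording are all hypothetical and would need to be tested with real kids. The sketch keeps the icon and sound identical everywhere (consistency) and varies only the wording by age (age-appropriateness).

```typescript
// Hypothetical sketch: none of these names come from a real library or product.
type AgeBand = "under8" | "tween" | "teen";

interface AiDisclosure {
  icon: string;     // visual cue shown next to the AI character or content
  soundCue: string; // audio cue identifier (assumed asset name)
  label: string;    // text cue, e.g. tooltip or banner copy
}

// One icon and one sound across every surface, so kids learn a single signal.
const AI_ICON = "✨";
const AI_SOUND = "ai-chime";

// Placeholder wording per age band; real copy should come from user research.
const LABELS: Record<AgeBand, string> = {
  under8: "I'm a computer helper, not a person.",
  tween: "You're talking to an AI, not a real person.",
  teen: "This is AI-generated. It is not a human.",
};

// Build the disclosure to attach to any AI-driven chat, content, or feed item.
function discloseAi(age: AgeBand): AiDisclosure {
  return { icon: AI_ICON, soundCue: AI_SOUND, label: LABELS[age] };
}
```

A chat widget, for example, could call `discloseAi("under8")` once per conversation and render the icon beside every bot message, with the label as a tooltip.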